Top 10 Data Warehouse Concepts That Every Analyst Must Know: A Comprehensive Guide

The global data-warehouse-as-a-service market is projected to reach $5,484.9 million in sales in 2023. Demand is then expected to grow at a compound annual growth rate of 22.4%, reaching $41,511.6 million by 2033. Whether you are a seasoned professional or new to the field, understanding the concepts behind this growth helps you put data analysis, data modeling, ETL processes, and data integration to work for effective decision-making. This blog covers the top 10 data warehousing concepts.

1. Data Sources

A data source is the point where data originates, that is, its first digitized or physical form. A data warehouse draws from diverse sources such as databases, flat files, live device measurements, web-scraped data, and static or streaming online services. For instance, in a global weather forecasting app, weather stations worldwide act as data sources, supplying real-time temperature data.

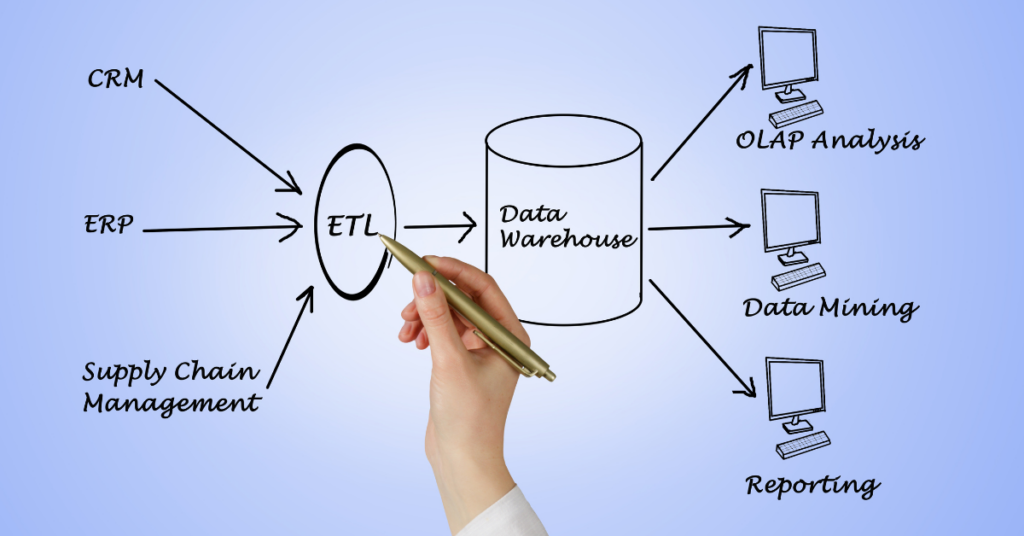

2. Data Integration

Data integration combines data from diverse sources, such as point-of-sale, inventory, and customer relationship management systems, into a data warehouse. The process involves extracting, transforming, and loading the data to ensure consistency and quality. This lets organizations analyze and report on data more efficiently and gives them a holistic view of both operations and customer behavior.
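As a rough illustration of the idea, the sketch below merges three small in-memory extracts into one record per product. The SKU key, the field names, and the sample values are all hypothetical; a real integration layer would read from the actual source systems.

```python
# Hypothetical extracts from three source systems, keyed by product SKU.
pos_sales = [
    {"sku": "A100", "units_sold": 3},
    {"sku": "B200", "units_sold": 1},
    {"sku": "A100", "units_sold": 2},
]
inventory = {"A100": 40, "B200": 0}   # units in stock per SKU
crm_feedback = {"A100": 4.5}          # average customer rating per SKU


def integrate(pos_sales, inventory, crm_feedback):
    """Combine the three sources into one record per SKU."""
    combined = {}
    # Aggregate point-of-sale lines so each SKU appears once.
    for sale in pos_sales:
        row = combined.setdefault(
            sale["sku"],
            {"sku": sale["sku"], "units_sold": 0, "in_stock": None, "avg_rating": None},
        )
        row["units_sold"] += sale["units_sold"]
    # Enrich each SKU with inventory and CRM data; missing values stay None.
    for sku, row in combined.items():
        row["in_stock"] = inventory.get(sku)
        row["avg_rating"] = crm_feedback.get(sku)
    return sorted(combined.values(), key=lambda r: r["sku"])


integrated = integrate(pos_sales, inventory, crm_feedback)
for row in integrated:
    print(row)
```

Keeping missing values as `None` rather than guessing a default makes gaps between sources visible to whoever analyzes the combined data later.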

3. Data Transformation Tools

Data transformation tools are essential in preparing data for use in data warehouses. These tools typically handle tasks such as cleaning, validating, standardizing, integrating, enriching, and mapping data. They are designed to work with data of varied formats from different sources, ensuring the data is accurate and reliable for the warehousing process. Notable features of these tools include user-friendly interfaces, automation capabilities, and scalability to handle large data sets efficiently.
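To make cleaning, validating, and standardizing concrete, here is a minimal sketch of what such a tool might do to a single customer record. The field names, the country mapping, and the accepted date formats are assumptions chosen for illustration, not the behavior of any particular product.

```python
from datetime import datetime

# Illustrative mapping of spelling variants to one country code.
COUNTRY_CODES = {"USA": "US", "UNITED STATES": "US", "U.K.": "GB", "UK": "GB"}


def clean_record(raw):
    """Clean, validate, and standardize one raw customer record.

    Returns the standardized record, or None if validation fails.
    """
    # Cleaning: trim whitespace and normalize case.
    email = raw.get("email", "").strip().lower()
    # Validation: reject rows without a plausible email address.
    if "@" not in email:
        return None
    # Standardization: map country variants to a single code.
    country = raw.get("country", "").strip().upper()
    country = COUNTRY_CODES.get(country, country)
    # Standardization: accept two date formats and emit ISO 8601.
    signup = None
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            signup = datetime.strptime(raw.get("signup", ""), fmt).date().isoformat()
            break
        except ValueError:
            continue
    return {"email": email, "country": country, "signup": signup}


print(clean_record({"email": " Ana@Example.com ", "country": "usa", "signup": "05/03/2023"}))
print(clean_record({"email": "not-an-email"}))
```

Dedicated transformation tools apply rules like these at scale and usually let you configure them without writing code.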

4. Data Storage

In a data warehouse, structured data models organize data for analysis and reporting. Unlike operational databases, which are usually designed around transactions, a data warehouse typically employs a dimensional data model, in which fact tables of measurements link to descriptive dimension tables. This offers a more user-friendly approach to querying. Separating analytical from operational data also improves performance for both workloads. Well-organized storage is therefore the basis for getting insights out of the warehouse.
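A dimensional model is often laid out as a star schema: one fact table surrounded by dimension tables. The sketch below builds a tiny one in an in-memory SQLite database; the table names, columns, and rows are invented for illustration, and a production warehouse would use its own platform and naming rules.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, iso_date TEXT, year INTEGER, month INTEGER);
-- The fact table holds measurements plus keys into each dimension.
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    units INTEGER,
    revenue REAL
);
INSERT INTO dim_product VALUES (1, 'Kettle', 'Kitchen'), (2, 'Lamp', 'Lighting');
INSERT INTO dim_date VALUES (20230101, '2023-01-01', 2023, 1), (20230201, '2023-02-01', 2023, 2);
INSERT INTO fact_sales VALUES (1, 20230101, 2, 50.0), (1, 20230201, 1, 25.0), (2, 20230201, 4, 80.0);
""")

# Analysts join the fact table to a dimension and aggregate.
revenue_by_category = conn.execute("""
    SELECT p.category, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(revenue_by_category)
```

Because every question follows the same pattern (join facts to dimensions, then group), queries against this layout tend to be short and predictable.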

5. Data Retrieval

Accessing data is a fundamental part of data warehousing, and SQL, the standard language for database management and querying, is integral to it. Developers use technical query tools to write and test SQL for precise data manipulation. Reporting tools rely on SQL to extract, aggregate, and analyze data for comprehensive reports, including cross-tabular queries that summarize data across several dimensions. End-user query tools offer a visual interface for building reports without writing SQL directly, but they still generate SQL underneath, and advanced reporting tools use it for more complex data access. In short, SQL connects the technical and user-friendly sides of querying and analysis in a data warehouse.
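As an example of the cross-tabular querying mentioned above, the following sketch pivots revenue by region and quarter using conditional aggregation. The `sales` table and its rows are made up for the example, and SQLite stands in for whatever warehouse engine is actually in use.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("North", "Q1", 100.0),
        ("North", "Q2", 150.0),
        ("South", "Q1", 80.0),
        ("South", "Q2", 120.0),
        ("South", "Q2", 30.0),
    ],
)

# One row per region, one column per quarter: a simple cross-tab.
crosstab = conn.execute("""
    SELECT region,
           SUM(CASE WHEN quarter = 'Q1' THEN revenue ELSE 0 END) AS q1,
           SUM(CASE WHEN quarter = 'Q2' THEN revenue ELSE 0 END) AS q2
    FROM sales
    GROUP BY region
    ORDER BY region
""").fetchall()

for region, q1, q2 in crosstab:
    print(region, q1, q2)
```

Visual report builders typically generate a query of this shape when a user drags one field to rows and another to columns.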

6. Metadata Repository

A metadata repository is a database or other storage mechanism used to store metadata, that is, data about data. It systematically organizes information about the content, format, and structure of data, which makes that data easier to find and use. Tailored to organizational needs, these repositories play a vital role in libraries, online platforms, and data governance. They also improve search engine rankings, ensure data interoperability, and support data preservation and visualization.

7. Metadata

Metadata, an essential yet frequently undervalued component of a data warehouse, acts as a comprehensive guide, providing detailed information about various elements within the data warehouse. It includes insights into database objects, security protocols, and ETL (Extract, Transform, Load) processes. The development of a metadata repository is key for effectively managing the data warehouse. It helps in resolving relationships and discrepancies between different data elements. Specifically, metadata aids in identifying and clarifying differences among various entities, and it plays a crucial role in combining data from diverse sources.

There are three main categories of metadata:

- Business metadata includes user-friendly definitions and descriptions of data, making it easier for business users to understand and interact with the data warehouse

- Technical metadata involves detailed information about data sources, data models, data lineage, and transformations applied during the ETL process

- Operational metadata captures run-time details such as data load times, execution logs, and backup schedules, crucial for operational management and performance monitoring

In sum, metadata offers a foundation for enhancing control and understanding of the data warehouse, facilitating smoother development and management processes.
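One way to picture the three categories is as fields on a single catalog entry. The sketch below is a hypothetical, in-memory stand-in for a metadata repository; the class name, fields, and sample values are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TableMetadata:
    # Business metadata: what the table means to its users.
    table_name: str
    description: str
    # Technical metadata: where the data comes from and how it is shaped.
    source_system: str
    columns: dict  # column name -> data type
    # Operational metadata: run-time details recorded by each load.
    load_history: list = field(default_factory=list)

    def record_load(self, loaded_at, row_count):
        """Append one load event to the operational history."""
        self.load_history.append({"loaded_at": loaded_at, "row_count": row_count})


# A dictionary keyed by table name plays the role of the repository.
repository = {}

orders_meta = TableMetadata(
    table_name="orders",
    description="One row per customer order, after returns are netted out.",
    source_system="point_of_sale",
    columns={"order_id": "INTEGER", "amount": "REAL"},
)
repository[orders_meta.table_name] = orders_meta
orders_meta.record_load("2023-06-01T02:00:00", 1250)

print(repository["orders"].description)
print(repository["orders"].load_history)
```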

8. Data Visualization

Data visualization is crucial for conveying the significance of data in a visual context, unveiling patterns and correlations. Visualization refers to either visual reporting or visual analysis. Visual reporting employs charts and graphics to showcase business performance, allowing analysts and business leaders to drill down for detailed insights. On the other hand, visual analysis empowers them to explore and discover insights with high interactivity, incorporating forecasting and statistical analysis.

9. Data Governance

Data governance is the management of the availability, usability, integrity, and security of enterprise data. It is pivotal to successful data warehousing programs because it ensures that data is handled consistently and securely across the entire organization. Protecting sensitive information involves measures such as access controls, encryption, and authentication, which safeguard both data integrity and confidentiality.
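A small sketch of an access control follows: sensitive columns are shown in clear text only to roles that a policy allows, and are masked for everyone else. The roles, the policy table, and the masking rule are all hypothetical, and hashing here is only a display mask, not a substitute for encryption or authentication.

```python
import hashlib

# Hypothetical policy: roles allowed to see each sensitive column in clear text.
# Columns not listed here are treated as non-sensitive.
COLUMN_POLICY = {
    "email": {"steward"},
    "salary": {"steward", "finance"},
}


def mask(value):
    """Replace a value with a short prefix of its SHA-256 digest."""
    return hashlib.sha256(str(value).encode("utf-8")).hexdigest()[:12]


def apply_policy(row, role):
    """Return a copy of the row with columns masked according to the role."""
    visible = {}
    for column, value in row.items():
        allowed_roles = COLUMN_POLICY.get(column)
        if allowed_roles is None or role in allowed_roles:
            visible[column] = value
        else:
            visible[column] = mask(value)
    return visible


employee = {"name": "Ana", "email": "ana@example.com", "salary": 52000}
print(apply_policy(employee, "steward"))
print(apply_policy(employee, "analyst"))
```

Applying the same policy function everywhere data is read is one simple way to get the uniform handling that governance calls for.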

10. Big Data

Big data refers to diverse, fast-changing data sets that can drive financial growth and support a range of business objectives. It meets the need for real-time applications, reduces costs through open-source technology, and enhances customer relationships. Big data is of two types: online big data, which powers real-time applications and is served by operational databases such as MongoDB, and offline big data, which is used in batch-oriented applications through processes like ETL and business intelligence tools.


Frequently Asked Questions About Data Warehouse Concepts

1. How Does Data Modeling Play a Role in Effective Data Analysis?

Data modeling is the architectural backbone of data warehouse concepts, providing a visual representation of data structures and relationships. Here’s how it contributes to effective data analysis:

- Enhances end-user access to diverse enterprise data

- Increases data consistency

- Provides additional data documentation

- Potentially lowers computing costs and boosts productivity

- Creates a flexible computing infrastructure that can adapt to both system and business changes


2. What is the ETL Process?

ETL, which stands for Extract, Transform, Load, covers the essential stages of data integration in a data warehouse. It begins with the Extract phase, where data is pulled from a diverse range of sources and staged for processing. The Transform stage cleanses and restructures the data so that it is suitable for analysis. Finally, the Load phase deposits the transformed data into the warehouse, where it is readily accessible for reporting and querying.
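The three phases can be sketched as three small functions. This is a minimal illustration under stated assumptions: the CSV text stands in for a source system, an in-memory SQLite database stands in for the warehouse, and the `orders` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3

# Stand-in for a file exported by a source system.
RAW_CSV = """order_id,amount,currency
1001, 19.99 ,usd
1002,,usd
1003,5.00,USD
"""


def extract(text):
    """Extract: read raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with no amount, fix types, standardize currency."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete rows
        cleaned.append((int(row["order_id"]), float(amount), row["currency"].strip().upper()))
    return cleaned


def load(conn, rows):
    """Load: write the transformed rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute(
    "SELECT order_id, amount, currency FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each phase in its own function makes it easier to test the transformation rules separately from the source and the warehouse.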

3. Why is the ETL Process Important in a Data Warehouse?

The ETL process is crucial in a data warehouse because it integrates data from diverse sources, ensures data quality through cleansing and validation, optimizes query performance, maintains historical data, and supports data transformation to meet specific business needs. Additionally, ETL processes enhance data security, consistency, and scalability, while automation reduces manual effort and errors, making data warehouses reliable sources for informed decision-making and analytics.


In conclusion, data warehouse concepts, including ETL processes, data modeling, and reporting, are the foundation of effective data analysis. Elevate your data analysis skills with Emeritus’ comprehensive data science courses. Explore data warehouse concepts, master ETL processes, and delve into data modeling and reporting. Unlock new opportunities for your career and take the first step toward a brighter future today.

Write to us at content@emeritus.org