Here Are the Top 5 Proven Linear Algebra Applications in Machine Learning

The history of machine learning (ML) dates back to the mid-20th century, when it was still largely a theoretical concept. Today it has become a transformative force: ML allows machines to use data and algorithms to imitate the way humans learn and, as a result, to improve their accuracy over time. A growing number of Indian businesses are incorporating ML into their operations. To work with ML, it is essential to become familiar with linear algebra, a branch of mathematics that ML algorithms depend on heavily, especially for numerical computation. So, let's look at the linear algebra basics that every ML enthusiast should know to deepen their understanding of ML models.

In this blog, we will be discussing:

- Basic Concepts of Linear Algebra

- Matrix Operations

- Eigenvalues and Eigenvectors

- Singular Value Decomposition (SVD)

- Linear Algebra in Machine Learning

1. Basic Concepts of Linear Algebra

A. Scalars, Vectors, and Matrices

1. Definition and Representation of Scalars, Vectors, and Matrices

In linear algebra, and in data science and machine learning with Python, we use scalars, vectors, and matrices as the fundamental mathematical entities for representing data. Let's take a look at each of them:

1. Scalars

A scalar is a single numerical quantity, represented by a real or complex number. It has magnitude but no direction; examples include speed, temperature, and volume. Scalars are generally denoted by lowercase letters such as a, b, and c.

2. Vectors

A vector, unlike a scalar, has both magnitude and direction. Vectors often represent quantities such as acceleration, momentum, and force. They are usually denoted by bold lowercase letters; for example, a three-dimensional vector is written v = [v₁, v₂, v₃], where v₁, v₂, and v₃ are its components.

3. Matrices

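A matrix is a rectangular array of numbers, symbols, or expressions arranged in rows and columns. It represents and manipulates data with multiple dimensions and is written in square brackets. For example, a general 2×3 matrix (2 rows, 3 columns) is written as:

$$M = \begin{bmatrix} m_{11} & m_{12} & m_{13} \\ m_{21} & m_{22} & m_{23} \end{bmatrix}$$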
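In Python, these three objects map naturally onto NumPy arrays with 0, 1, and 2 dimensions. The sketch below assumes NumPy is installed; the values are arbitrary illustrations.

```python
import numpy as np

# A scalar: a single number (0 dimensions)
a = 3.5

# A vector with three components v1, v2, v3 (1 dimension)
v = np.array([1.0, 2.0, 3.0])

# A 2x3 matrix: 2 rows and 3 columns (2 dimensions)
M = np.array([[1, 2, 3],
              [4, 5, 6]])

print(np.ndim(a), v.shape, M.shape)  # 0 (3,) (2, 3)
# Element in row 2, column 3 (NumPy indexes from 0)
print(M[1, 2])                       # 6
```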

B. Vector Spaces

1. Introduction to Vector Spaces and Their Properties

It is crucial to understand vector spaces and their properties to learn linear algebra. A vector space L over a field F is a set of elements (vectors) equipped with two operations: vector addition and scalar multiplication. Here are a few properties of vector spaces:

- Commutativity: The order in which vectors are added does not affect the result, so a + b = b + a for all vectors a and b in L.

- Distributivity: Scalar multiplication distributes over both vector addition and scalar addition, so n(a + b) = na + nb and (n + o)b = nb + ob for all scalars n, o in F and vectors a, b in L.

- Closure: If a and b are vectors in L and n is a scalar in F, then a + b and na are also in L.

- Dimension: It refers to the number of vectors in a basis for the vector space.
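The properties above can be spot-checked numerically. This is a minimal sketch that assumes ℝ³ (real 3-D vectors) as the example vector space and uses randomly drawn vectors; a check on a few samples illustrates the properties but does not prove them.

```python
import numpy as np

rng = np.random.default_rng(0)
a, b = rng.standard_normal(3), rng.standard_normal(3)  # two vectors in R^3
n, o = 2.0, -0.5                                       # two scalars

# Commutativity of vector addition
commutative = np.allclose(a + b, b + a)

# Distributivity over vector addition and over scalar addition
distributive = (np.allclose(n * (a + b), n * a + n * b)
                and np.allclose((n + o) * b, n * b + o * b))

# Closure: sums and scalar multiples are still 3-component real vectors
closed = (a + b).shape == (3,) and (n * a).shape == (3,)

# Dimension: the standard basis of R^3 (rows of the identity) has 3 vectors
dimension = np.eye(3).shape[0]
```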

2. Explanation of Linear Independence and Basis Vectors

Linear Independence: A set of vectors is linearly independent if no vector in the set can be written as a linear combination of the others. Linear independence is one of the two requirements (together with spanning the space) for a set of vectors to form a basis of a vector space.

Basis Vectors: A basis is a set of linearly independent vectors that span the entire vector space. In other words, any vector in L can be expressed as a linear combination of the basis vectors.

In conclusion, linear independence and basis vectors are fundamental to understanding linear algebra, particularly in vector spaces.
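A practical way to test linear independence is to stack the vectors as rows of a matrix and compare its rank with the number of vectors. The sketch below assumes NumPy; the helper name `linearly_independent` and the example vectors are hypothetical. It also expresses a vector as a linear combination of a non-standard basis of ℝ³ by solving a linear system.

```python
import numpy as np

def linearly_independent(vectors):
    """Return True if the given vectors (one per row) are linearly independent."""
    A = np.array(vectors, dtype=float)
    # Independent exactly when the rank equals the number of vectors
    return bool(np.linalg.matrix_rank(A) == A.shape[0])

basis = [[1, 1, 0], [0, 1, 1], [1, 0, 1]]      # a basis of R^3
dependent = [[1, 2, 3], [2, 4, 6], [0, 1, 0]]  # second row = 2 * first row

# Write x as c1*b1 + c2*b2 + c3*b3: the basis vectors are the columns of B
B = np.array(basis, dtype=float).T
x = np.array([4.0, -1.0, 2.5])
coeffs = np.linalg.solve(B, x)
```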

2. Matrix Operations

A. Matrix Addition and Subtraction

1. Description of Matrix Addition and Subtraction

It is important to understand matrix addition and subtraction before we deal with linear algebra applications in machine learning. To add two matrices, we add the elements at the same position (the same row and column index) in each matrix, one pair at a time. This means both matrices must have the same dimensions. For example, in the following sum we add 6 and 3, 8 and 2, 9 and 7, and 4 and 5:

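$$\begin{bmatrix} 6 & 8 \\ 9 & 4 \end{bmatrix} + \begin{bmatrix} 3 & 2 \\ 7 & 5 \end{bmatrix} = \begin{bmatrix} 9 & 10 \\ 16 & 9 \end{bmatrix}$$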

Subtraction works the same way: we subtract each element of the second matrix from the element at the same position in the first.
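Using the same two matrices as the example above, NumPy's `+` and `-` operators perform element-wise addition and subtraction. A minimal sketch, assuming NumPy:

```python
import numpy as np

A = np.array([[6, 8],
              [9, 4]])
B = np.array([[3, 2],
              [7, 5]])

S = A + B  # element-wise sum: [[9, 10], [16, 9]]
D = A - B  # element-wise difference: [[3, 6], [2, -1]]

# Matrices whose shapes are incompatible cannot be added
try:
    np.ones((2, 2)) + np.ones((3, 3))
    shape_error = False
except ValueError:
    shape_error = True
```

NumPy's broadcasting rules also let some differently shaped arrays combine (for example, a matrix plus a single row), which goes beyond the strict same-dimensions rule of matrix addition; shapes that cannot be broadcast, as in the `try` block, raise a `ValueError`.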

B. Matrix Multiplication

1. Explanation of Matrix Multiplication

Matrix multiplication takes a pair of matrices and produces another matrix. Each entry of the result is the dot product of a row of the first matrix with a column of the second. For this to work, the number of columns in the first matrix must equal the number of rows in the second. The resulting matrix has as many rows as the first matrix and as many columns as the second. For example:

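$$\begin{bmatrix} 6 & 8 \\ 9 & 4 \end{bmatrix} \times \begin{bmatrix} 3 & 2 \\ 7 & 5 \end{bmatrix} = \begin{bmatrix} 6 \cdot 3 + 8 \cdot 7 & 6 \cdot 2 + 8 \cdot 5 \\ 9 \cdot 3 + 4 \cdot 7 & 9 \cdot 2 + 4 \cdot 5 \end{bmatrix} = \begin{bmatrix} 74 & 52 \\ 55 & 38 \end{bmatrix}$$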
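In NumPy the `@` operator performs matrix multiplication (whereas `*` multiplies element-wise). This sketch, assuming NumPy, reproduces the 2×2 example, checks the shape rule, computes one entry by hand as a row-by-column dot product, and shows that the order of the factors matters.

```python
import numpy as np

A = np.array([[6, 8],
              [9, 4]])
B = np.array([[3, 2],
              [7, 5]])

P = A @ B  # matrix product: [[74, 52], [55, 38]]

# Shape rule: (2 x 3) @ (3 x 4) -> (2 x 4)
X = np.ones((2, 3))
Y = np.ones((3, 4))
Z = X @ Y

# Entry in row 1, column 2: dot product of row 1 of A with column 2 of B
p01 = sum(A[0, k] * B[k, 1] for k in range(2))  # 6*2 + 8*5 = 52

# Matrix multiplication is not commutative in general
order_matters = not np.array_equal(A @ B, B @ A)
```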

2. Use Cases and Applications in Machine Learning

Matrix addition, subtraction, and multiplication are essential to applying linear algebra in machine learning. Here are a few examples:

- Data Normalization: We use matrix subtraction to subtract the mean of each feature from every data point, a standard first step when normalizing data.

- Error Calculation: Subtracting predicted values from actual values gives the error for each data point, which is used to measure and reduce error when training models.

- Recommendation Systems: In collaborative filtering, the user-item interaction matrix is approximated by the product of a user-factor matrix and an item-factor matrix; multiplying these matrices predicts the missing values.
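The three uses above can be sketched in a few lines of NumPy. All data here are made up for illustration, and the user and item factor matrices `U` and `V` are hypothetical stand-ins for factors that a real recommender would learn (for example, by matrix factorization).

```python
import numpy as np

# Hypothetical data: 4 samples, 2 features
X = np.array([[1.0, 200.0],
              [2.0, 180.0],
              [3.0, 240.0],
              [4.0, 220.0]])

# Data normalization: subtract each column's mean (broadcast across rows),
# then divide by each column's standard deviation
X_centered = X - X.mean(axis=0)
X_standardized = X_centered / X.std(axis=0)

# Error calculation: residuals between actual and predicted values
y_true = np.array([3.0, 5.0, 7.0])
y_pred = np.array([2.5, 5.5, 7.0])
errors = y_true - y_pred
mse = np.mean(errors ** 2)  # mean squared error

# Recommendation: hypothetical user factors (3 users x 2) and item factors (2 x 4 items)
U = np.array([[1.0, 0.5],
              [0.2, 1.0],
              [0.9, 0.1]])
V = np.array([[4.0, 1.0, 3.0, 0.5],
              [0.5, 4.0, 1.0, 3.5]])
predicted_ratings = U @ V  # 3 x 4 matrix of predicted scores for every user-item pair
```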

C. Transpose and Inverse

1. Definition and Properties of Matrix Transpose and Inverse

The transpose of a matrix A, written Aᵀ, is obtained by swapping its rows and columns. Only a square matrix can have an inverse, which is written A⁻¹ (A raised to the power of -1). Multiplying a matrix by its inverse gives the identity matrix I. Here are some of the properties:

- A square matrix A has an inverse A⁻¹ if and only if its determinant is not equal to zero.

- If A is invertible, then A⁻¹A = AA⁻¹ = I.

- The transpose of the inverse of a matrix equals the inverse of its transpose: (A⁻¹)ᵀ = (Aᵀ)⁻¹.

2. Applications and Importance in Machine Learning

Matrix transpose and inverse appear in many machine learning techniques. Here are a few:

- Linear Regression: The normal equation, θ = (XᵀX)⁻¹Xᵀy, gives the parameters θ that minimize the least squares error for a design matrix X and a target vector y. It uses both the transpose and the inverse.

- Optimization: Many techniques in machine learning, such as gradient descent, use matrix operations to solve optimization problems by finding the minimum of a cost function.

- Graph and Network Analysis: Many machine learning applications involve graphs and networks. Developers commonly represent a graph as a matrix, such as its adjacency matrix, and use matrix operations to analyze the graph's properties and dynamics.
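As a sketch of the normal equation in action, assuming NumPy and synthetic data generated from y = 1 + 2x plus a little noise:

```python
import numpy as np

rng = np.random.default_rng(42)
# Synthetic data (assumed for illustration): y = 1 + 2x + small noise
x = rng.uniform(0, 10, size=50)
y = 1.0 + 2.0 * x + rng.normal(scale=0.1, size=50)

# Design matrix X with a column of ones for the intercept
X = np.column_stack([np.ones_like(x), x])

# Normal equation: theta = (X^T X)^{-1} X^T y
theta = np.linalg.inv(X.T @ X) @ X.T @ y

# Same solution without forming the inverse explicitly
theta_solve = np.linalg.solve(X.T @ X, X.T @ y)
```

Explicitly inverting XᵀX is shown only to mirror the formula; `np.linalg.solve` (or `np.linalg.lstsq`) is generally the more numerically stable choice.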

3. Implementation in Python

NumPy makes it easy to use matrix operations and linear algebra in Python: it provides functions for everything from the transpose to the inverse, among other things. Let's look at an illustration:

Transpose:
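A minimal sketch with illustrative values, assuming NumPy: the `.T` attribute and the `np.transpose` function both swap rows and columns.

```python
import numpy as np

A = np.array([[1, 2, 3],
              [4, 5, 6]])   # shape (2, 3)

A_T = A.T                  # rows become columns: shape (3, 2)
print(A_T)
# [[1 4]
#  [2 5]
#  [3 6]]
```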

Inverse:
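A minimal NumPy sketch of the inverse with illustrative values, which also checks the properties listed earlier: a nonzero determinant, A⁻¹A = AA⁻¹ = I, and (A⁻¹)ᵀ = (Aᵀ)⁻¹. For a singular matrix, `np.linalg.inv` raises `np.linalg.LinAlgError`.

```python
import numpy as np

A = np.array([[4.0, 7.0],
              [2.0, 6.0]])

det = np.linalg.det(A)   # 4*6 - 7*2 = 10 (nonzero), so A is invertible
A_inv = np.linalg.inv(A)

# A^{-1} A = A A^{-1} = I, up to floating-point rounding
identity_check = (np.allclose(A_inv @ A, np.eye(2))
                  and np.allclose(A @ A_inv, np.eye(2)))

# The transpose of the inverse equals the inverse of the transpose
transpose_check = np.allclose(A_inv.T, np.linalg.inv(A.T))

# A singular matrix (determinant 0) has no inverse
singular = np.array([[1.0, 2.0],
                     [2.0, 4.0]])
try:
    np.linalg.inv(singular)
    raised = False
except np.linalg.LinAlgError:
    raised = True
```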

3. Eigenvalues and Eigenvectors

A. Definition and Properties

1. Introduction to Eigenvalues and Eigenvectors

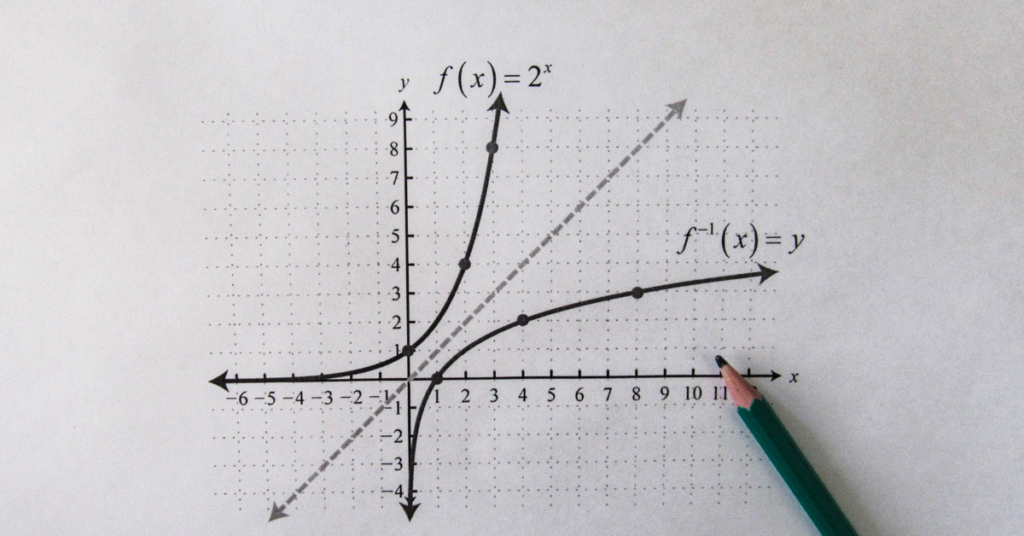

These fundamental concepts contribute significantly to linear algebra applications in machine learning. An eigenvector of a square matrix A is a nonzero vector v that stays on the same line through the origin when the linear transformation A is applied to it; the transformation only scales it by a scalar λ, called the corresponding eigenvalue. In symbols, Av = λv. In other words, applying the transformation to an eigenvector gives the same result as multiplying the eigenvector by its eigenvalue: a scaled version of the original eigenvector.

An eigenvalue can be a real or a complex number, and the determinant of a square matrix equals the product of its eigenvalues (counted with multiplicity). Eigenvectors, however, are not unique: any nonzero scalar multiple of an eigenvector is also an eigenvector. Eigenvectors corresponding to distinct eigenvalues are linearly independent.
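The definition and properties above can be checked numerically. This sketch assumes NumPy and uses `np.linalg.eig`, which returns the eigenvalues and a matrix whose columns are the matching eigenvectors; the matrices are illustrative.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])   # eigenvalues 3 and 1

eigenvalues, eigenvectors = np.linalg.eig(A)  # column i pairs with eigenvalues[i]

# Each pair satisfies A v = lambda v
all_ok = all(np.allclose(A @ v, lam * v)
             for lam, v in zip(eigenvalues, eigenvectors.T))

# The determinant equals the product of the eigenvalues
det_matches = np.isclose(np.linalg.det(A), np.prod(eigenvalues))

# Any nonzero multiple of an eigenvector is still an eigenvector
w = -5.0 * eigenvectors[:, 0]
scaled_ok = np.allclose(A @ w, eigenvalues[0] * w)

# Eigenvectors for distinct eigenvalues are linearly independent
independent = np.linalg.matrix_rank(eigenvectors) == 2

# Eigenvalues can be complex: a 90-degree rotation has eigenvalues +i and -i
R = np.array([[0.0, -1.0],
              [1.0, 0.0]])
rotation_eigenvalues = np.linalg.eigvals(R)
```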

2. Understanding the Geometric Interpretation

The geometric interpretation of eigenvalues and eigenvectors is equally important for understanding linear algebra applications in machine learning, because it helps us visualize what they mean:

- Imagine a linear transformation represented by a matrix A. This transformation can be seen as stretching, shrinking, and rotating vectors in space. Eigenvectors represent the directions in which the transformation does not cause any rotation, only stretching or shrinking.

- The eigenvalue λ associated with an eigenvector v tells us how much the transformation stretches or compresses vectors along that direction. If |λ| > 1, the vector is stretched; if |λ| < 1, it is compressed; and if λ is negative, its direction is also reversed.
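To make the geometry concrete, here is a small sketch (assuming NumPy; the helper `angle_between` is hypothetical) using a diagonal matrix that stretches the x-axis by 3 and shrinks the y-axis by half. An eigenvector keeps its direction, a generic vector is turned, and a negative eigenvalue flips its eigenvector to point the opposite way.

```python
import numpy as np

def angle_between(u, w):
    """Angle in degrees between two 2-D vectors."""
    cos = u @ w / (np.linalg.norm(u) * np.linalg.norm(w))
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))

# Stretch the x-axis by 3 and shrink the y-axis by half
A = np.array([[3.0, 0.0],
              [0.0, 0.5]])

e1 = np.array([1.0, 0.0])   # eigenvector of A with eigenvalue 3
d = np.array([1.0, 1.0])    # not an eigenvector of A

print(A @ e1)                     # [3. 0.]: same direction, 3 times longer
print(angle_between(e1, A @ e1))  # 0.0: no rotation
print(angle_between(d, A @ d))    # about 35.5 degrees: direction changed

# A negative eigenvalue reverses the eigenvector's direction
F = np.array([[-2.0, 0.0],
              [0.0, 1.0]])
flip_angle = angle_between(e1, F @ e1)  # 180 degrees
```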

B. Calculation and Use Cases

1. Methods for Calculating Eigenvalues and Eigenvectors

There are several methods for calculating eigenvalues and eigenvectors in data science and machine learning with Python. Here are some of them:

- Power Iteration: This iterative method starts with an initial guess vector and repeatedly multiplies it by the matrix, normalizing after each step. It finds only the dominant eigenvalue (the one with the largest absolute value) and its corresponding eigenvector.

- QR Algorithm: This method is based on repeatedly applying the QR decomposition and is widely used in practice. It is generally used to find all the eigenvalues (and, with extra bookkeeping, the eigenvectors) of a matrix at once.

- Numerical Libraries: Python libraries such as NumPy and SciPy provide built-in functions for calculating eigenvalues and eigenvectors. These functions are typically based on the QR algorithm or other efficient methods.
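Power iteration as described above fits in a few lines. This is a minimal sketch, assuming NumPy and a matrix with a single dominant eigenvalue; the function name `power_iteration` and its parameters are hypothetical, and it uses the Rayleigh quotient vᵀAv (for a unit vector v) as the eigenvalue estimate.

```python
import numpy as np

def power_iteration(A, num_iters=1000, tol=1e-10, seed=0):
    """Estimate the dominant eigenvalue (largest in absolute value) and its eigenvector.

    Assumes A has a single dominant eigenvalue; convergence slows as the
    ratio |lambda_2 / lambda_1| approaches 1.
    """
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(A.shape[0])   # random initial guess
    v /= np.linalg.norm(v)
    lam = v @ A @ v
    for _ in range(num_iters):
        w = A @ v                         # apply the matrix to the current vector
        v = w / np.linalg.norm(w)         # renormalize so values do not blow up
        lam = v @ A @ v                   # Rayleigh quotient: eigenvalue estimate
        if np.linalg.norm(A @ v - lam * v) < tol:
            break                         # A v is (numerically) lam * v
    return lam, v

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
lam, v = power_iteration(A)
```

For this matrix the dominant eigenvalue is (5 + √5)/2 ≈ 3.618, and the estimate converges to it within a few dozen iterations.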

2. Importance in Dimensionality Reduction and Principal Component Analysis (PCA)

Eigenvalues and eigenvectors play a pivotal role in dimensionality reduction techniques such as Principal Component Analysis (PCA):

- Identify Important Directions: In PCA, the eigenvectors of the data's covariance matrix point in the directions of greatest variance (the principal components), and each eigenvalue measures how much variance its direction captures. Projecting the data onto the eigenvectors with the largest eigenvalues reduces its dimensionality while keeping most of the variance.

- Efficient Computation: Because PCA reduces to a single eigendecomposition, it is often cheaper to compute than many other dimensionality reduction techniques, which makes it a valuable tool for handling large datasets.

- Visualization and Interpretation: Projecting data onto the top two or three eigenvectors lets us visualize it in a low-dimensional space. This helps us gain insights into relationships between data points and identify cluster patterns.
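The PCA steps can be sketched with an eigendecomposition of the covariance matrix. This assumes NumPy and synthetic 3-D data that varies mostly along one direction; in practice a library such as scikit-learn is often used instead, and many implementations compute PCA via SVD.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic 3-D data that varies mostly along the direction (1, 2, 0) (assumed)
t = rng.normal(size=200)
X = (np.column_stack([t, 2 * t, 0.1 * rng.normal(size=200)])
     + 0.05 * rng.normal(size=(200, 3)))

# 1. Center the data
Xc = X - X.mean(axis=0)

# 2. Covariance matrix of the features (3 x 3)
C = np.cov(Xc, rowvar=False)

# 3. Eigendecomposition; eigh suits symmetric matrices and returns ascending eigenvalues
eigenvalues, eigenvectors = np.linalg.eigh(C)
order = np.argsort(eigenvalues)[::-1]          # sort by descending variance
eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]

# 4. Project onto the top k principal components
k = 2
X_reduced = Xc @ eigenvectors[:, :k]
explained_ratio = eigenvalues[:k].sum() / eigenvalues.sum()
```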

3. Python Implementation

As noted above, a library such as NumPy is usually the most practical choice in Python, because its routines run in optimized compiled code. Let's look at how to calculate eigenvalues and eigenvectors.

NumPy
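A minimal sketch of the NumPy route, assuming NumPy: `np.linalg.eig` handles general square matrices, while `np.linalg.eigh` is specialized for symmetric (Hermitian) matrices and returns real eigenvalues in ascending order. The matrices are illustrative.

```python
import numpy as np

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])   # eigenvalues 5 and 2

eigenvalues, eigenvectors = np.linalg.eig(A)
# Column i of `eigenvectors` pairs with eigenvalues[i] (order not guaranteed)
for i in range(len(eigenvalues)):
    print(eigenvalues[i], eigenvectors[:, i])

# For symmetric matrices, eigh is faster and returns real, ascending eigenvalues
S = np.array([[2.0, 1.0],
              [1.0, 2.0]])
w, Q = np.linalg.eigh(S)
```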

QR Algorithm
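The QR algorithm can be illustrated with its simplest, unshifted form: factor Aₖ = QR, then form Aₖ₊₁ = RQ, which has the same eigenvalues. This is a teaching sketch, assuming NumPy and a symmetric matrix whose eigenvalues have distinct magnitudes; production libraries such as LAPACK add refinements like Hessenberg reduction and shifts. The function name `qr_algorithm` is hypothetical.

```python
import numpy as np

def qr_algorithm(A, num_iters=500, tol=1e-12):
    """Unshifted QR algorithm for a symmetric matrix.

    Returns approximate eigenvalues (the diagonal of the converged matrix)
    and the accumulated orthogonal matrix, whose columns approximate the
    eigenvectors when A is symmetric.
    """
    Ak = np.array(A, dtype=float)
    Q_total = np.eye(Ak.shape[0])
    for _ in range(num_iters):
        Q, R = np.linalg.qr(Ak)    # factor A_k = Q R
        Ak = R @ Q                 # A_{k+1} = R Q = Q^T A_k Q, same eigenvalues
        Q_total = Q_total @ Q      # accumulate the orthogonal transformations
        if np.allclose(np.tril(Ak, -1), 0.0, atol=tol):
            break                  # below-diagonal entries have vanished
    return np.diag(Ak), Q_total

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
eigenvalues, eigenvectors = qr_algorithm(A)
```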

4. Singular Value Decomposition (SVD)

A. Overview of SVD

1. Definition and Significance in Linear Algebra

SVD, or Singular Value Decomposition, factors any matrix A into the product of three matrices, A = UΣVᵀ. They are as follows:

- U, a left orthogonal matrix whose columns are the left singular vectors

- Σ, a diagonal matrix whose diagonal entries are the non-negative singular values, ordered from largest to smallest

- Vᵀ, the transpose of a right orthogonal matrix V whose columns are the right singular vectors

SVD underpins many machine learning algorithms. It is also essential in dimensionality reduction, least squares solutions, and image processing, among other things.

2. Application in Machine Learning and Data Compression

SVD is useful in collaborative filtering, where it helps ML algorithms recommend items to users based on their past behavior and the behavior of similar users. Specifically, SVD helps identify latent factors that represent user preferences and item characteristics.

SVD is also used in image, text, and signal compression. It compresses an image by discarding the smaller, less significant singular values and their singular vectors, keeping a low-rank approximation of the image. For text and signals, the same truncation keeps the dominant structure (for example, the main topics in a term-document matrix) while discarding redundancy and noise.
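Compression by truncation can be sketched as follows, assuming NumPy and a synthetic 64×64 array standing in for a grayscale image. Keeping the k largest singular values stores k(m + n + 1) numbers instead of mn, and the approximation error in the Frobenius norm equals the square root of the sum of the squared discarded singular values.

```python
import numpy as np

rng = np.random.default_rng(1)
# Stand-in for a grayscale image: a rank-2 pattern plus a little noise (assumed data)
x = np.linspace(0, 1, 64)
image = (np.outer(np.sin(3 * x), np.cos(2 * x))
         + 0.5 * np.outer(x, x)
         + 0.01 * rng.normal(size=(64, 64)))

U, s, Vt = np.linalg.svd(image, full_matrices=False)

k = 2  # keep only the k largest singular values
compressed = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

# Storage: k*(m + n + 1) numbers instead of m*n
stored_values = k * (image.shape[0] + image.shape[1] + 1)

# Frobenius-norm error of the rank-k approximation
error = np.linalg.norm(image - compressed)
```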

B. SVD Implementation in Python

1. Step-by-Step Explanation of SVD Implementation Using Python Libraries

- Import libraries such as NumPy and SciPy

- Define the matrix you want to decompose

- Perform SVD, which returns the three matrices discussed above

- Access the SVD components:

  - Left singular vectors

  - Singular values

  - Right singular vectors

- Matrix reconstruction

- Dimensionality reduction (optional)

There is an option to reduce dimensionality by truncating singular values and keeping only the largest ones.

- SVD applications

Now, you can use SVD for specific purposes, such as image compression, feature extraction, and collaborative filtering.
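The steps above map onto NumPy as follows. This sketch assumes NumPy (`scipy.linalg.svd` returns the same three outputs), and the matrix is illustrative. Note that NumPy returns the singular values as a 1-D array and returns Vᵀ rather than V.

```python
import numpy as np

# Step 2: define the matrix to decompose
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# Step 3: perform SVD (reduced form: U is 2x2, s has 2 values, Vt is 2x3)
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Step 4: access the components
left_singular_vectors = U       # columns are the left singular vectors
singular_values = s             # sorted from largest to smallest
right_singular_vectors = Vt.T   # columns are the right singular vectors

# Step 5: reconstruct A = U diag(s) V^T
A_reconstructed = U @ np.diag(s) @ Vt

# Step 6 (optional): keep only the largest singular value (rank-1 approximation)
k = 1
A_rank1 = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]
```

For this matrix, AAᵀ has eigenvalues 12 and 10, so the singular values are √12 and √10.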

5. Linear Algebra in Machine Learning

A. Regression and Optimization

1. How Linear Algebra is Used in Regression Models

Linear algebra has several uses in regression models. Data and the relationships between variables are represented with matrices and linear transformations: the features form a design matrix X, the targets form a vector y, and a linear model predicts Xθ. Linear algebra also provides the mathematical framework for the underlying optimization problem, and its techniques (such as the normal equation or QR decomposition) are used to solve for the regression coefficients. Lastly, linear algebra provides the foundation for various statistical tests that assess the significance of regression models and their parameters. In essence, linear algebra gives regression models an efficient, accurate, and uniform mathematical formulation.
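As a sketch of solving for regression coefficients, assuming NumPy and synthetic data: `np.linalg.lstsq` computes the least squares solution without explicitly inverting XᵀX, and the R² goodness-of-fit measure then takes only a few vector operations.

```python
import numpy as np

rng = np.random.default_rng(7)
# Hypothetical data: an intercept column plus two features
n = 100
X = np.column_stack([np.ones(n), rng.normal(size=n), rng.normal(size=n)])
true_coefs = np.array([0.5, 1.5, -2.0])
y = X @ true_coefs + rng.normal(scale=0.2, size=n)

# Least squares fit; also returns residual sum, rank of X, and singular values of X
coefs, residuals, rank, sing_vals = np.linalg.lstsq(X, y, rcond=None)

# Coefficient of determination (R^2) from vector operations
y_hat = X @ coefs
r2 = 1 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)
```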

2. Optimization Techniques Using Linear Algebra

Optimization is central to machine learning, and many optimization techniques rely on linear algebra. Let's look at a few of them:

- Simplex Method: This widely used method for linear programming moves from one vertex of the feasible region to an adjacent vertex with a better objective value until it reaches an optimum. Each move is a pivot step carried out with linear algebra operations such as matrix inversion and Gaussian elimination.

- Gradient Descent: This iterative algorithm minimizes an objective function by repeatedly stepping in the direction of steepest descent, the negative gradient. Linear algebra provides the framework for calculating the gradient, which determines the direction of each step.

- Lagrange Multipliers: This approach introduces Lagrange multipliers to incorporate equality constraints into the objective function. Linear algebra is then used to set up and solve the resulting system of equations for the optimal solution.
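Two of these techniques can be sketched with NumPy on small, made-up problems: gradient descent on the mean squared error of a linear model, whose gradient in matrix form is (2/n)Xᵀ(Xw - y), and the Lagrange multiplier method for minimizing ‖x‖² subject to a linear constraint Ax = b, where setting the gradient of the Lagrangian to zero yields a single linear system. The learning rate, iteration count, and constraint are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
X = np.column_stack([np.ones(50), rng.normal(size=50)])
y = X @ np.array([1.0, -3.0]) + rng.normal(scale=0.1, size=50)

# Gradient descent on f(w) = ||Xw - y||^2 / n
w = np.zeros(2)
learning_rate = 0.1
for _ in range(1000):
    gradient = 2 / len(y) * X.T @ (X @ w - y)  # gradient in matrix form
    w -= learning_rate * gradient              # step along the negative gradient

w_exact = np.linalg.lstsq(X, y, rcond=None)[0]  # reference least squares solution

# Lagrange multipliers: minimize ||x||^2 subject to A x = b.
# Lagrangian: ||x||^2 + lambda^T (A x - b). Zero gradient gives the linear system
#   [2I  A^T] [x     ]   [0]
#   [A   0  ] [lambda] = [b]
A = np.array([[1.0, 1.0, 1.0]])   # constraint x1 + x2 + x3 = 3
b = np.array([3.0])
n_vars, n_cons = A.shape[1], A.shape[0]
system = np.block([[2 * np.eye(n_vars), A.T],
                   [A, np.zeros((n_cons, n_cons))]])
solution = np.linalg.solve(system, np.concatenate([np.zeros(n_vars), b]))
x_opt, multipliers = solution[:n_vars], solution[n_vars:]
```

For the constraint x₁ + x₂ + x₃ = 3, the solution is x = (1, 1, 1) with multiplier λ = -2.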

B. Neural Networks

1. Role of Linear Algebra in the Structure and Training of Neural Networks

Linear algebra is crucial to neural networks, which are themselves central to modern machine learning. A network's architecture is built from matrices, vectors, and linear transformations: each layer multiplies its input by a weight matrix, adds a bias vector, and passes the result through an activation function, such as a linear activation or the softmax function. Training relies on a loss function, backpropagation, and optimization techniques such as gradient descent and momentum, all of which are expressed as matrix and vector operations. Lastly, SVD can be used for dimensionality reduction and regularization.
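A forward pass through a small network shows this matrix structure directly. This sketch assumes NumPy and hypothetical layer sizes with random weights; it uses a linear hidden layer and a softmax output, matching the activations mentioned above. The `softmax` helper is written out here rather than taken from a library.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(z):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# Hypothetical sizes: batch of 4 inputs, 3 features, 5 hidden units, 2 classes
X = rng.normal(size=(4, 3))
W1, b1 = rng.normal(size=(3, 5)), np.zeros(5)
W2, b2 = rng.normal(size=(5, 2)), np.zeros(2)

hidden = X @ W1 + b1        # first layer: linear transformation (linear activation)
logits = hidden @ W2 + b2   # second layer: another linear transformation
probs = softmax(logits)     # softmax turns scores into class probabilities
```

Because both layers here are linear, they collapse into a single linear map, X(W₁W₂) + b₁W₂ + b₂; this is why hidden layers usually use a nonlinear activation in practice.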

2. Backpropagation and Matrix Calculus

Backpropagation is the algorithm used to train neural networks by minimizing a given loss function. It computes the gradients of the loss with respect to the network parameters, working backward from the output layer with the chain rule; an optimizer such as gradient descent then uses these gradients to update the parameters and reduce the overall loss.

Matrix calculus is a mathematical framework that extends traditional calculus to deal with matrices and vectors. Matrix calculus markedly simplifies the expression of gradients and derivatives in the context of neural networks.
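Matrix calculus turns the gradient of a loss into a single matrix expression. As a minimal sketch, assuming NumPy, a single linear layer, and a mean squared error loss (all data random and illustrative), the gradient with respect to the weights is (2/N)Xᵀ(XW - Y), where N is the number of entries in Y. The code checks this against finite differences and takes one gradient-descent step.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))   # 8 samples, 3 features
Y = rng.normal(size=(8, 2))   # 2 targets per sample
W = rng.normal(size=(3, 2))   # weights of a single linear layer

def loss(W):
    """Mean squared error of the linear layer X @ W against targets Y."""
    return np.mean((X @ W - Y) ** 2)

# Matrix calculus: dL/dW = (2 / N) X^T (X W - Y), with N = number of entries in Y
grad_analytic = 2 / Y.size * X.T @ (X @ W - Y)

# Check against central finite differences, one weight at a time
eps = 1e-6
grad_numeric = np.zeros_like(W)
for i in range(W.shape[0]):
    for j in range(W.shape[1]):
        E = np.zeros_like(W)
        E[i, j] = eps
        grad_numeric[i, j] = (loss(W + E) - loss(W - E)) / (2 * eps)

# One gradient-descent update using the backpropagated gradient
W_updated = W - 0.1 * grad_analytic
```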

Learning With Emeritus

The world is evidently on its way to adopting data science on a vast scale. Hence, you must upskill to stay ahead of the curve in the job market. Emeritus’ suite of data science courses is designed to help your CV stand out. Moreover, the courses are curated by experts with first-hand domain knowledge, ensuring that you only obtain relevant insights. Sign up with Emeritus today to start your tech journey with a partner who wants to help you succeed in your career!

Write to us at content@emeritus.org